Working methodology of the OSuR

The Open Source University Ranking (OSuR) draws on the experience of professionals in open source software, in the development of university rankings, and in the study of rankings worldwide.

The OSuR measures a series of qualitative and quantitative criteria on the spread of open source software at each university. These are synthesized into a single value, the OSDI (Open Source Diffusion Index), which makes it easy to compare universities. The OSDI is a single, standardized metric that rates how much an organization disseminates open source software.

Stages of development

1. Contact with experts in open source software and in university ranking development to provide technical support. The working team is defined.

2. Theoretical analysis of the literature, events, research and promotion of open source software to identify the key aspects to rate. The sources are cited in the bibliography.

3. Design of indicators based on phases 1 and 2. Each indicator is given a weight according to its importance with respect to the objective: the dissemination of open source software.

4. Peer review. Through a peer review process similar to that used for scientific publications, the team of expert contributors defined in phase 1 evaluates the quality of the proposal and suggests improvements.

5. Testing of indicators to ensure that they measure the most important aspects for the purpose of the ranking, that they are based on objective and reliable information, and that enough data has been collected to use them. Those that fail the test are redesigned.

6. Empirical analysis: interviews with universities and gathering of public information about them.

7. Statistical analysis, normalizing the data so that it can be compared, and calculation of the key value: the OSDI index.

8. Publication of results: a classification table of universities that summarizes their effort to promote open source software.

Definition of indicators to assess

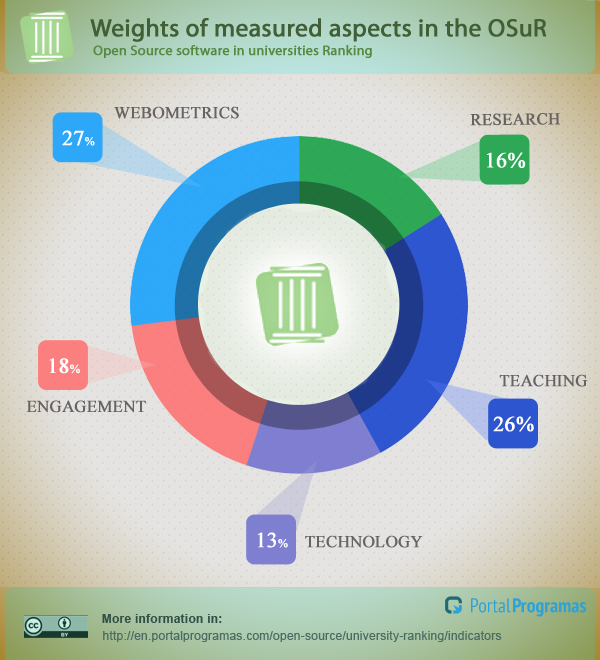

The experts have evaluated the criteria and have determined the weights of each one. These indicators have been grouped into a number of dimensions that are detailed below:

The rating criteria are chosen based on information gathered in interviews with universities and on the opinion of several experts. Each criterion is given a weight according to its importance, and the criteria are grouped so that a weight can be assigned to each group.

How to Interpret results

The result is a ranking of universities based on their Open Source Divulgation Index (OSDI). This indicator is a value between 0 and 100 that shows the effort universities make to promote open source software and makes it possible to compare them. It also allows comparing universities, regions ...

In addition, the value of each criterion shows which specific aspects a university should improve and which it already does well. It indicates how a university performs in that aspect relative to all the others, where 0 means that it performs the same as the average. The closer the value is to -4, the worse; the closer it is to +4, the better. More statistical information is available in the analysis.

Statistical methods used

Normalization of results

The criteria measured are not directly comparable because the information is very heterogeneous. To compare them we use the z-score, which brings all the variables analyzed onto the same scale so that they can be compared or, in this case, added. The z-score is already used in other rankings.

The z-score is a statistical measure of how far a value lies from the average, expressed in standard deviations. In practice it almost always falls in the range [-4, 4]; a value greater than 0 indicates that the value is above the average, and a value less than 0 that it is below. It is calculated by subtracting the population average from the specific value and dividing by the standard deviation: z = (x - μ) / σ, where x is the value, μ the population average and σ the standard deviation.
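As an illustration of the normalization described above, here is a minimal Python sketch. The function name `z_scores` and the sample values are hypothetical, and the use of the population standard deviation is an assumption based on the wording "population average"; the ranking's actual implementation is not shown in this document.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Return the z-score of each value relative to the whole list.

    Assumption: the population standard deviation is used.
    """
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All values are identical, so every value equals the average.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical raw values of one criterion for eight universities.
raw = [2, 4, 4, 4, 5, 5, 7, 9]
print(z_scores(raw))  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```

In this sample the average is 5 and the standard deviation is 2, so the university with a raw value of 9 sits two standard deviations above the average.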

Importance of the criteria

Each criterion has a different importance with respect to the objective of the study. This importance is a subjective value: the same criterion may have a different importance for each person and still be perfectly valid. Depending on the importance given to each criterion, the final classification could be significantly different.

Measures have been taken to minimize the error that comes from relying on the subjective values of one or more individuals. A standardized process was followed to make the assignment as objective as possible:

- A group of independent experts reviewed the importance of each criterion.

- Universities, as a party involved in the study, are also able to give their opinion.

- The result is a weight for each criterion, agreed among the experts and the universities themselves.
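To show how agreed weights and normalized criteria can be combined, the following Python sketch adds up one university's z-scores, each multiplied by its weight. The criterion names, the weight values and the function `weighted_score` are hypothetical; the document does not publish the actual weights or state that they sum to 1.

```python
def weighted_score(z_by_criterion, weights):
    """Combine per-criterion z-scores into one weighted sum.

    Both arguments are dicts keyed by criterion name (hypothetical names).
    """
    missing = set(weights) - set(z_by_criterion)
    if missing:
        raise ValueError(f"missing z-scores for: {sorted(missing)}")
    return sum(weights[c] * z_by_criterion[c] for c in weights)


# Hypothetical weights agreed by experts and universities (assumed to sum to 1).
weights = {"teaching": 0.5, "research": 0.3, "web": 0.2}

# Hypothetical z-scores of one university for each criterion.
z = {"teaching": 1.0, "research": -0.5, "web": 2.0}

print(round(weighted_score(z, weights), 2))  # 0.75
```

A criterion with a larger weight moves the combined score more, which is why the choice of weights can change the final classification.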

Final Score: OSDI index

The final score is an index called OSDI (Open Source Software Divulgation Index). It indicates the effort a university makes to promote open source software, relative to the university that promotes it most.

The top-ranked university has an OSDI of 100 (it is the one that promotes open source software most), and the rest receive a value between 0 and 100 in proportion to how much they promote it. This makes it simple to:

- See the real effort of each university in this area: the closer its OSDI is to 100, the better.

- Compare universities with each other: as the OSDI is a percentage value, it is easy to measure the difference between them.
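One possible way to obtain such a 0 to 100 index from combined scores is sketched below in Python. The exact rescaling used for the OSDI is not given in this document; this sketch assumes a min-max rescaling in which the highest score maps to 100 and the lowest to 0. The function `to_osdi`, the university names and the scores are hypothetical.

```python
def to_osdi(scores):
    """Rescale combined scores to 0..100, with the top score mapped to 100.

    Assumption: min-max rescaling, so the lowest score maps to 0.
    """
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # Every university has the same score, so all share the top value.
        return {name: 100.0 for name in scores}
    return {name: 100.0 * (s - lo) / (hi - lo) for name, s in scores.items()}


# Hypothetical combined scores (weighted sums of z-scores).
scores = {"University A": 1.5, "University B": 0.5, "University C": -0.5}

osdi = to_osdi(scores)
for name in sorted(osdi, key=osdi.get, reverse=True):
    print(name, osdi[name])
```

With these sample scores, University A gets 100.0, University B 50.0 and University C 0.0, so the gap between two universities can be read directly from the difference in their values.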

Why is the position in the chart not so important? Because the ranking position does not show the actual distance between universities with respect to the purpose of this study. What matters is the effort made, and that is measured by comparing OSDI values. For example, there may be a large difference in how much the universities in positions 1 to 5 promote open source software, and very little difference among those in positions 10 to 20. This is why the OSDI index is useful: it measures how far each university's promotion of open source software is from that of the university that promotes it most.

Other methodologies have also been considered, and we explain them as well.

Highlights

1. Based on studies and other recognized rankings

In developing this project we reviewed studies on which indicators to measure, various mathematical analyses (such as the z-score) and other similar rankings. Most of the works considered come from the field of bibliometrics, with criteria and analyses aimed at measuring the development of university research. Other rankings rank universities according to their research published in international journals, or their quality as institutions.

While the mission of this ranking is very different, the methodology is very similar and the study of their strengths and weaknesses has been very useful in defining the methodology of this ranking.

2. Results transparency

One of the mainstays of boosting knowledge in society is to treat information as a public asset and offer it freely. We therefore think the job is not finished when the results are published. Providing free access to the collected data is an important part of the work: it helps promote knowledge in society because it allows the data to be reused and more information to be extracted from it. It also allows the methodology and the results obtained to be validated. That is the purpose of the Open Science initiative that we followed when preparing this report.

Therefore, all results about universities are offered freely so that other studies can use them and extract more information.

Other methodologies

Classification

Another way of classifying universities has also been put forward. This methodology criticizes classifying universities by granting a fixed weight to each criterion, because the weight of a criterion may differ depending on the mission and vision of the university, as well as on the objectives of each user of the ranking. Therefore, the Berlin Principles recommend that there be a predefined rating and that users be able to set the weight of each criterion themselves, thereby obtaining a personalized classification.
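The idea of a personalized classification can be sketched in Python as follows: the same normalized data produces a different order when the user supplies different weights. The function `rank_with_weights`, the university names, the criterion names and all values are hypothetical and only illustrate the alternative methodology; they are not taken from any actual ranking.

```python
def rank_with_weights(z_scores, weights):
    """Rank universities by the weighted sum of their per-criterion z-scores.

    Criteria that the user gives no weight to count as weight 0.
    """
    totals = {
        name: sum(weights.get(c, 0.0) * z for c, z in by_criterion.items())
        for name, by_criterion in z_scores.items()
    }
    return sorted(totals, key=totals.get, reverse=True)


# Hypothetical z-scores for two universities on two criteria.
data = {
    "University A": {"teaching": 1.0, "research": -1.0},
    "University B": {"teaching": -1.0, "research": 1.0},
}

# A user who cares mostly about teaching, and one who cares mostly about research.
print(rank_with_weights(data, {"teaching": 0.8, "research": 0.2}))
print(rank_with_weights(data, {"teaching": 0.2, "research": 0.8}))
```

The first call places University A first and the second places University B first, which shows how user-chosen weights lead to different classifications from identical data.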

Most university rankings use the same classification approach as the OSuR, but some follow this alternative methodology. More information on the methodology of each ranking can be found in the literature.

The Ranking of Universities in open source software assigns fixed weights to the criteria in order to define a standardized methodology that analyzes all universities under the same parameters. These weights are defined through a peer review process by a group of experts in the field. This makes further statistical analysis possible, such as a multivariate biplot analysis.

Download and use of open source software at university

Why is the use of specific open source apps not measured?

It is not measured because, while knowing the number of open source apps in use would be interesting, in practice it is almost impossible to measure this value reliably. Many people have commented that in their faculty or in a particular subject open source software is used instead of proprietary software such as the Nod32 antivirus, Windows Live Messenger, Word, Winrar ... and that this was not taken into account in this ranking.

The problem is that using open source software in one particular place barely influences the overall calculation. A university is a very large place, with many faculties, subjects, teachers, courses ... For this factor to be taken into account, we would have to review every app used on the university's computers. That is very detailed information that could rarely be obtained accurately. For that reason, this approach was considered not reliable enough to be part of the ranking and was dismissed.